Wayfair’s Virtual Assistant

- Date: 2020-2021
- Team: Wayfair Customer Service
- Industry: e-Commerce
- Platforms: Web

In 2020, Wayfair’s customer service department set out to pilot a virtual assistant built on machine learning (ML): a chatbot that would help cut the cost of customer contacts for a company whose call centers employed nearly 3,000 agents globally.

The department opted for a homegrown solution, realizing our data was the most valuable piece of the technology recipe. I was lucky enough to join the team from the get-go and help a group of about 10 analysts and product managers tag the very first training data set. The process involved reading text messages customers had sent to customer service and labeling each with the most appropriate resolution (or a few) the business could provide. The intent prediction ML model trained on this data was codenamed Halo. It could take any string of text (an “utterance”) and accurately predict what the customer was contacting us about.

Iteration & Testing

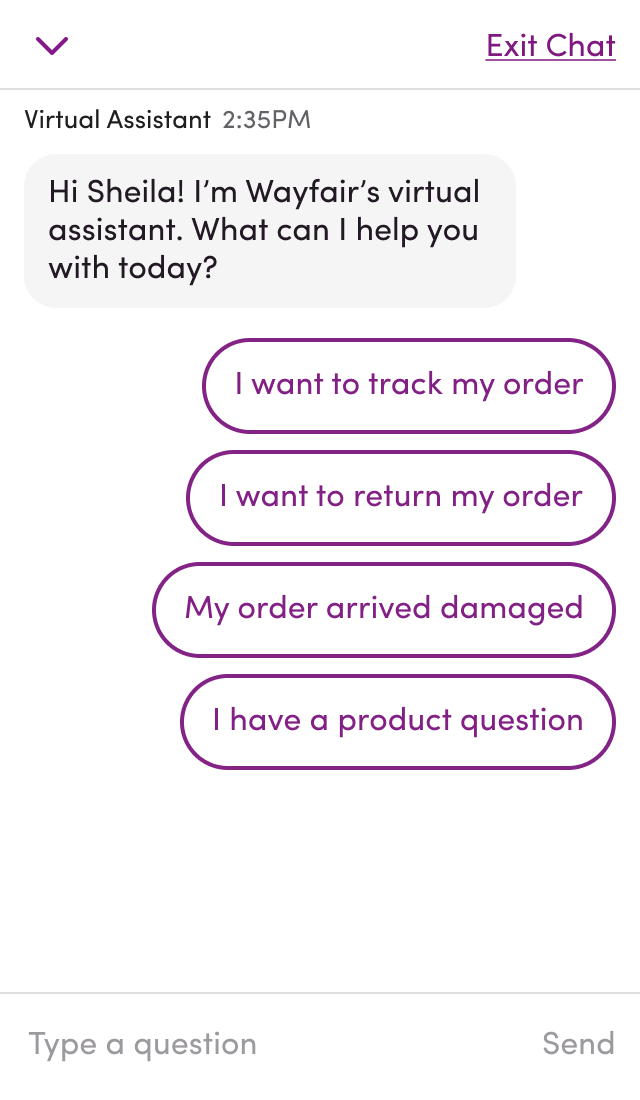

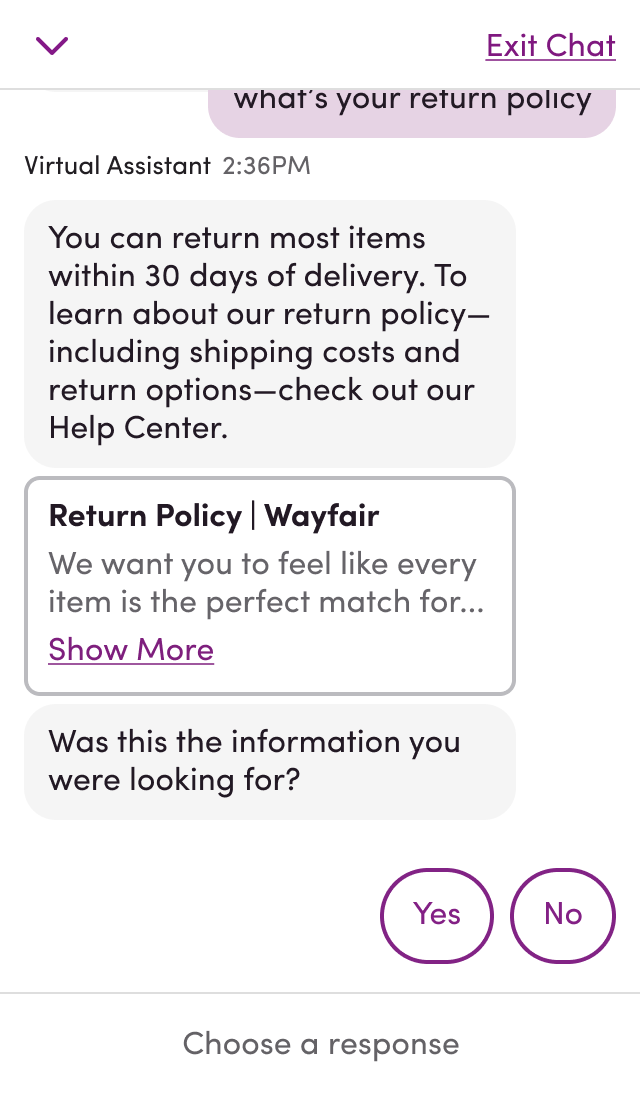

The team was looking to develop an MVP and test it with a small set of customers. To productize Halo’s powerful intent prediction capability, I wireframed a series of flows from multiple starting points. I leveraged UI components from both Wayfair’s extensive design system and our existing live chat application to specify the behavior and styling of message bubbles, buttons, status lines, and so on. I also designed a few new elements, like the floating action button and its corresponding popups, which helped customers discover the assistant. Finally, I coordinated content, conversational flow, and copy guidelines with Joe, a content strategist.

The MVP test surpassed our projections despite very tight constraints, which we managed by setting up the test in creative ways. For example, customers initially could not select the item they were having a problem with from their order history. The test validated that our initial intent prediction accuracy was adequate (it would improve to nearly 90% in later months with much larger training sets). It also validated both that customers were willing to engage with the assistant and that those who did would stay engaged in surprisingly high numbers all the way through the closing skill (the logical end of a conversation). The results earned the team further investment in the initiative, additional tests, and opportunities to influence the roadmaps of other teams in our department with integrations that would considerably level up the capabilities and utility of the assistant.

Scaling & Teambuilding

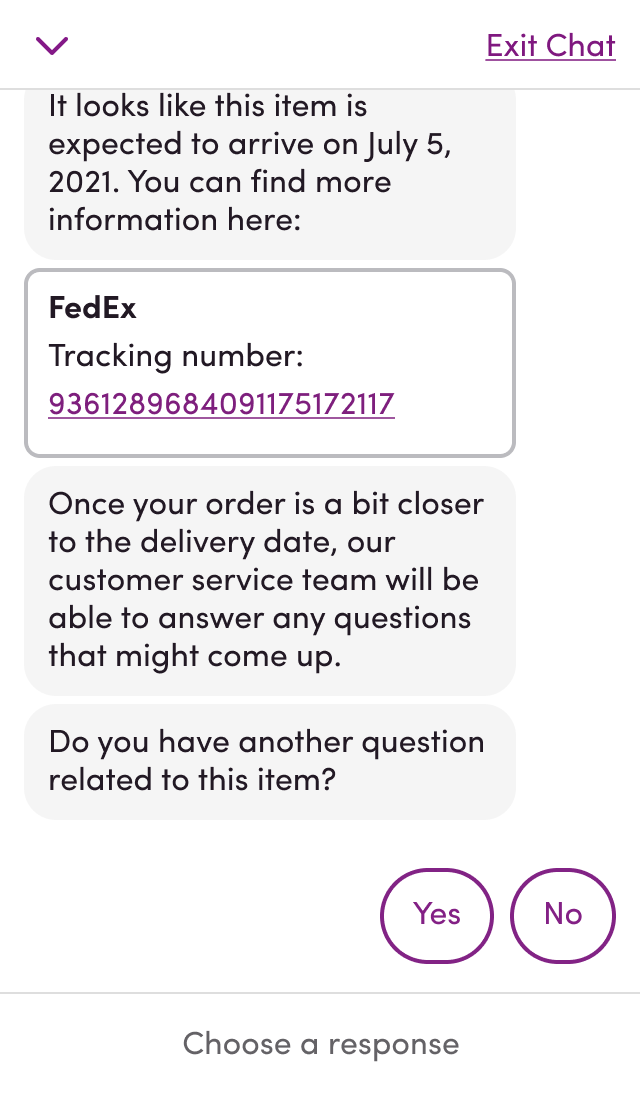

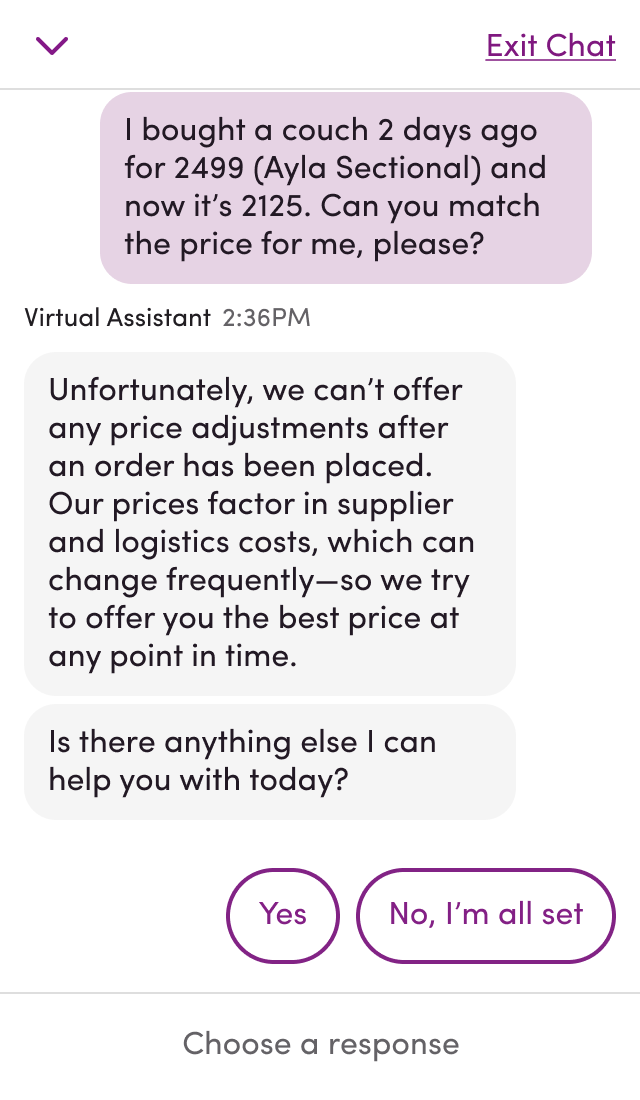

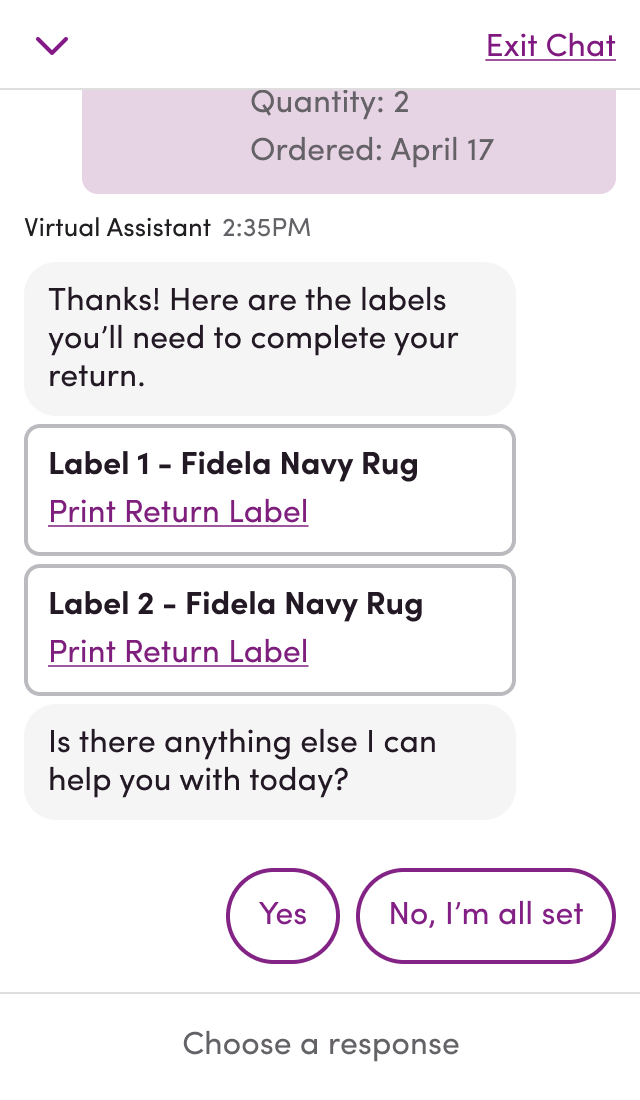

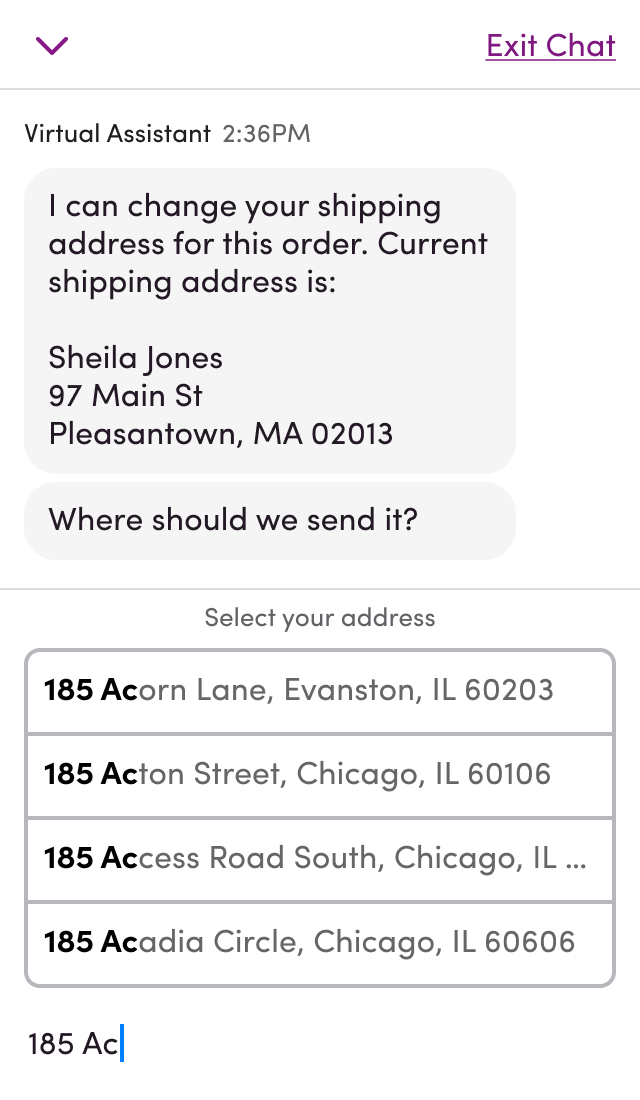

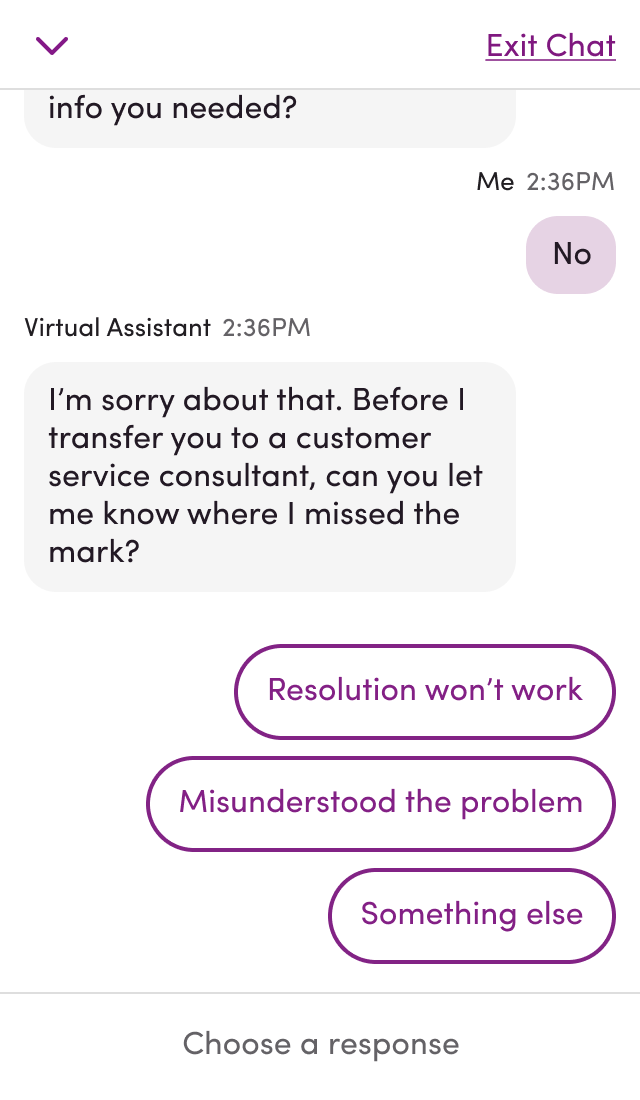

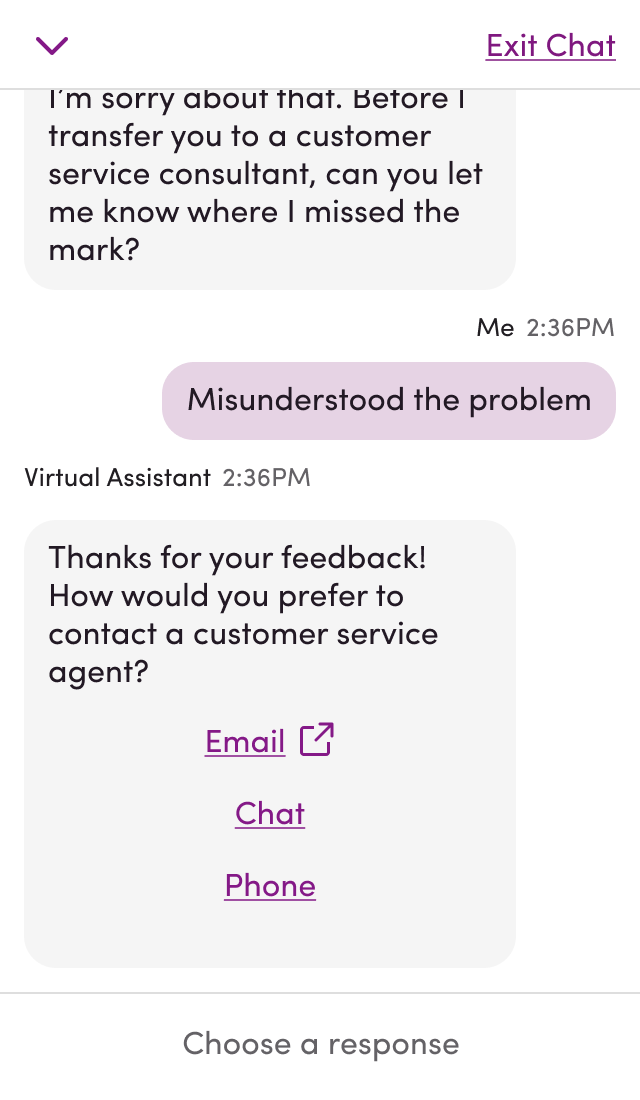

Over time, I designed features large and small and touched just about every facet of the virtual assistant. I put together end-to-end plans for entire workflows like cancellation and shipping address change, and explored the limits of conversational UI for reporting damaged items, a very complex workflow in service. I worked up new skills like item selection, transfer to live help from both the customer’s and the agent’s perspectives, pre-filled options at the start of the conversation personalized to the customer’s account and order history, and feedback loops for negatively rated interactions. I worked closely with Tara, a product manager, to create more specific answers and tailored resolutions for multifaceted intents like order status, which can vary widely depending on factors like the latest supplier updates, product category, where an order is in its lifecycle, and customers’ unique motivations. I collaborated with Pasha, a director, to outline concept videos that promoted the product and marketed its potential to other departments at the company. I introduced product cards, as well as preview cards for informational resolutions that linked to pages like our help center articles, carriers’ tracking pages, and return labels. I helped recruit Mark and Brian, two designers; Maureen, a content strategist; and Youme, a researcher, to take on the bulk of the work involved in scaling the product. I also oversaw their work once they hit the ground running, did a fair bit of testing and QA, and drafted research plans and activities for intents performing below our expectations.

I enjoyed designing conversational UI because the constraints of the medium forced me to become a ruthless editor. What is simple enough to be achievable? What combination of conversational and visual UI works best at any given moment? Which integrations are even worthwhile? All engaging problems to resolve. And I learned a bit about the state of the art in natural language processing. ▨